Artificial intelligence, or AI, is to language as a calculator is to math. That’s the analogy District 219’s Chief Officer of Technology, Philip Hintz, used when speaking to North Star News about plans to implement AI.

“We don’t want to ban [AI] because it’s going to be here forever,” Hintz said. “We can’t uninvent it, and we want to help you guys to learn how to use it along with everything you do, and we want you to be able to use it responsibly.”

Hintz’s job is to integrate AI into classroom learning in District 219, and the first step is to teach teachers how to use this growing technology. The district has subscribed to several teaching resources that use generative AI, including MagicSchool and Brisk. These teaching assistants can create presentations, quizzes, rubrics, and even “teacher puns” with just a few commands.

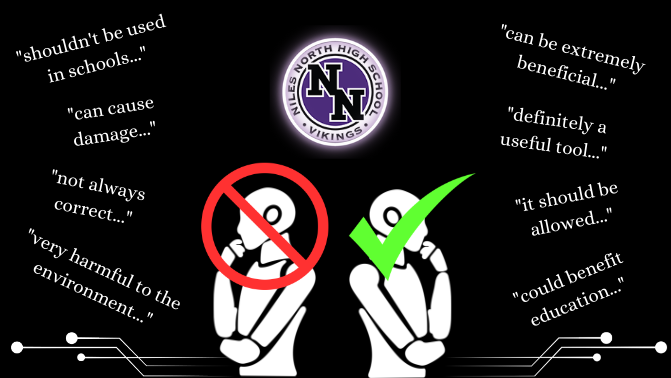

The issue of AI usage at Niles North has been controversial among students and staff, to say the least. While school administrators tend to embrace the usage of AI in schools, teachers and students have shared concerns and mentioned nuances about the implications of this new technology.

“[AI] seems pretty fundamentally different than a calculator because it is,” English teacher James Haberl said. “It’s because we’re talking about ideas, not solutions.”

Haberl fears AI will undermine the heart of his subject, replacing students’ authentic thoughts with AI-generated ones.

“I really got involved in teaching because I want to talk about ideas and books and movies with students, and I just really want to know what they think,” Haberl said. “AI creates such a barrier to me seeing what my students actually think about the things that we’re talking about.”

English teacher Barbara Hoff’s main concern is that struggling students will use AI to hide their academic challenges, making it difficult for teachers to identify any issues.

“Maybe [you use AI] because you’re a super duper struggling reader,” Hoff said. “We have lots of great resources at the school that could help a student like that. But if there’s this mask of using AI to respond to things… then it’s just masking the real problem, and they’re not going to get help, because they’re not going to really learn.”

Like Hoff, science teacher Susan Trzaskus worries that AI has the potential to mask a student’s understanding through superfluous language.

“For science, we have Claim-Evidence-Reasoning or CERs,” Trzaskus said. “I actually have gone to all paper-written CERs so that students have the ability to show me what they know. I think they get overly concerned about the phrasing. For science, we just want to know, what do [students] know? AI tends to muddy that up.”

At the beginning of the year, Haberl asked his students how comfortable they would be with AI grading their essays rather than him.

“[My students are] all like, ‘That would be horrible. I would not feel comfortable with that at all. I think you would be much better at grading it,’” Haberl said. “So then why would you trust [AI] to answer [your] questions?”

When it came to teachers creating lesson plans using AI, senior Natalie Ng, a frequent user of the technology, didn’t mind the shortcut.

“I can’t blame them honestly,” Ng said. “I don’t really care. I don’t think it’s less thoughtful, or there’s less input, because sometimes AI can just come up with better questions.”

But when it came to the idea of AI grading English papers, students were far more reluctant.

“I would want my teacher to actually read my [essay] for sure, because AI doesn’t understand my basic style and my history of writing, like how I usually write my stuff,” senior Johnny Chamoun said.

History teacher Albert Chan wanted to test MagicSchool’s ability to make a quiz based on a YouTube video. He wrote his own set of questions, then plugged the video link into MagicSchool. He was dissatisfied with the program’s results.

“My questions were much more specific,” Chan said. “My questions got what I wanted. The AI questions were very based on the captions and like, ‘What’s the name of this person?’ You know? That’s a worthless question.”

Hoff explained how the convenience of AI can hinder creativity when it comes to education.

“There’s people [who are] real excited about [how] AI can generate lessons,” Hoff said. “AI can grade papers for you. But my thing is, so if AI generated the lesson, the students used AI to write the assignment, and then I use AI to grade the assignment, what have any of us done?”

Similarly, Trzaskus explained how AI not only limits creativity and learning but also limits meaningful and necessary interpersonal interaction.

“I know that it can grade my CERs for me, but I just feel like, if I let a kid use AI to write the CER, and then I use it to grade the CER, who’s learning and who’s assessing? Nobody!” Trzaskus said. “The computer is doing the work for the student, and then for me. What is the purpose, then, of us being together?”

Neither Hoff nor Haberl has used AI in class yet, and both say they aren’t planning to anytime soon. However, Chan allowed his freshman Modern World History students to experiment with the technology by asking ChatGPT about a food item from the Columbian Exchange. The assignment gave students a chance to learn how to use AI, and Chan figured that, for such a quick task, it was an appropriate use.

“We didn’t have to go to the library to get the information,” Chan said. “It wasn’t a hard academic research writing assignment. It was just [that] I needed them to get the information. And I thought it would be a cool way to find out more about their favorite food.”

Many students have used AI, with discretion, to help in school. Junior Uruj Hazara has used the technology to help her understand science concepts better.

“Most of the time I ask it about a question,” Hazara said. “If I just need the guidance or the first step, I’m like ‘Can you help me figure out this problem?’ Or, like, ‘Don’t tell me answers.’ Or sometimes I just need to see the answers, see if I got it right.”

Math teacher Ryan Murphy explained how the nuances of using AI differ across types of math classes.

“In Stats, when you’re trying to generate questions for a project or understand how a project works, using AI gives you a really good summary,” Murphy said. “AI can be a hindrance in Algebra 2, when students use it just to complete assignments, or when AI gives back a response that is something from calculus or a much higher level mathematics, where it actually confuses students more. Students that use [AI that way] look like they’re cheaters when they’re really trying to be learners and they’re not sure how to use AI appropriately.”

Hintz acknowledges that using AI is not necessary or appropriate for every assignment. Working alongside department heads, he has developed a system for showing students when AI can be used. Next semester, a document outlining when it’s appropriate for students to use AI will be released in all classrooms.

Hintz has also developed the acronym H.A.H., or Human-AI-Human, to help students understand how to use AI and to recognize that AI isn’t always correct. Under this approach, students can type their questions or commands into an AI tool, but they then have to double-check the answers with further research.

Senior Said Zukanovic uses AI to help with smaller school tasks, such as breaking down dense texts for science class and working through review worksheets. But no matter the task, he makes sure to confirm the information is correct.

“I always go back and find the article myself, just to also make sure that what the AI is saying is correct,” Zukanovic said. “More often than not, it is correct. But that’s just because this technology is evolving. Two years ago, it had definitely a higher chance of being incorrect.”

Both Hintz and Haberl agreed that the technology is unable to say “I don’t know,” so even when it doesn’t know the answer to a question, it will still give some sort of answer, accurate or not. Hintz also says systems like ChatGPT violate the principle of equity. While experimenting with the platform, he typed in a question, but some of the sources it gave didn’t exist when he searched for them on Google. After he subscribed to the premium version of ChatGPT (which costs $20 per month), the same question produced different sources.

“It gave me real resources and real references and everything, and it gave me real good information,” Hintz said. “So my fear is it’s not equitable.”

Despite hesitation from some teachers and students, D219 is choosing to embrace the change. Hintz quotes Dr. Karim R. Lakhani from Harvard Business School:

“AI will not replace students, but students that use AI could replace students without AI,” Hintz said. “Teachers will not be replaced by AI, but teachers who use AI could replace teachers who don’t.”
